In today’s fast-paced digital landscape, organizations face constant pressure to make informed decisions that drive growth and optimize performance. A/B testing and experimentation are powerful tools that empower businesses to make data-driven decisions with confidence. By testing hypotheses, analyzing results, and iterating based on insights, companies can enhance user experiences, improve conversion rates, and maximize ROI.

What is A/B Testing?

A/B testing, also known as split testing, is a controlled experiment that compares two or more versions of a webpage, app feature, or marketing campaign to determine which performs better. The goal is to identify changes that lead to better outcomes, such as higher engagement, increased sales, or improved retention rates.

For instance, a company might test two versions of a landing page: one with a green call-to-action (CTA) button and another with a blue one. By randomly assigning users to each version and analyzing their behavior, the company can decide which CTA color drives more conversions.

Why is A/B Testing Important?

- Informed Decision-Making: A/B testing replaces guesswork with empirical evidence. Instead of relying on intuition, businesses can make changes based on real user data.

- Improved User Experience: By identifying what resonates with users, companies can tailor experiences to meet their needs and preferences.

- Maximized ROI: Small, incremental improvements through testing can significantly impact revenue and efficiency over time.

- Risk Mitigation: Testing changes on a small scale before a full rollout minimizes the risk of implementing ineffective or harmful updates.

The A/B Testing Process

To run a successful A/B test, follow these steps:

1. Define Your Objective

Clearly outline the goal of your test. Are you looking to increase click-through rates, improve user engagement, or reduce bounce rates? A well-defined objective sets the foundation for a meaningful experiment.

2. Develop a Hypothesis

Formulate a hypothesis based on existing data or user feedback. For example, “We believe that simplifying the checkout process will reduce cart abandonment.”
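To make a hypothesis testable, it helps to restate it as a pair of statistical hypotheses about the metric. Writing p_A and p_B for the true rates of the metric in the control and the variant (notation introduced here for illustration), a two-sided test sets up:

```latex
H_0:\ p_B = p_A \qquad \text{versus} \qquad H_1:\ p_B \neq p_A
```

For the checkout example, p would be the cart-abandonment rate, and success means p_B is lower than p_A. A one-sided alternative (H_1: p_B < p_A) is sometimes used when only one direction matters, but that choice should be fixed before the test starts, not after seeing the data.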

3. Identify Variables

Determine the variable you want to test (independent variable) and the metric you will measure (dependent variable). Examples include:

- Independent variable: CTA color

- Dependent variable: Conversion rate
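In code, the independent variable is simply the variant label each user was assigned, and the dependent variable is computed from the outcomes recorded for those users. A minimal sketch, assuming a hypothetical record format with one row per user (the `Observation` class is illustrative, not part of any testing tool):

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One user's exposure to the test (hypothetical record format)."""
    user_id: str
    variant: str      # independent variable: which CTA color the user saw
    converted: bool   # raw outcome used to compute the dependent variable


def conversion_rates(observations):
    """Return {variant: conversions / users}, assuming one row per user."""
    users = defaultdict(int)
    conversions = defaultdict(int)
    for obs in observations:
        users[obs.variant] += 1
        conversions[obs.variant] += int(obs.converted)
    return {variant: conversions[variant] / users[variant] for variant in users}


# Toy data for illustration only.
observations = [
    Observation("u1", "green", True),
    Observation("u2", "blue", True),
    Observation("u3", "green", True),
    Observation("u4", "blue", False),
    Observation("u5", "green", False),
    Observation("u6", "blue", False),
    Observation("u7", "green", False),
    Observation("u8", "blue", False),
]
print(conversion_rates(observations))  # {'green': 0.5, 'blue': 0.25}
```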

4. Create Test Variants

Design and implement the different versions to be tested. Ensure that the changes are isolated to a single variable to avoid confounding results.

5. Randomize and Split Traffic

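Use tools like Optimizely or Adobe Target to randomly assign users to each version, and keep each user in the same variant for the whole test. Random assignment is what makes the comparison unbiased: it balances differences between users across variants, so a gap in the metric can be attributed to the change itself. An equal split is common because it usually gives the most statistical power for a given amount of traffic.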
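If you assign users yourself (for example, in server-side tests), a common approach is to hash a stable user identifier together with an experiment name, so the same user always lands in the same variant and different experiments get independent splits. A minimal sketch; the function name and the experiment key `"cta-color"` are hypothetical, and it assumes a stable user ID is available:

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant with near-equal probability.

    Hashing "experiment:user_id" means a returning user always sees the same
    variant, while a different experiment name reshuffles the assignment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # A 256-bit hash taken modulo a small number gives a near-uniform bucket.
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


for user in ["user-1", "user-2", "user-3"]:
    print(user, assign_variant(user, "cta-color"))
```

Because the assignment is a pure function of its inputs, it needs no stored lookup table, and any service that knows the user ID will compute the same variant.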

6. Run the Experiment

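Let the test run until it reaches the sample size you calculated in advance, and often for at least one full week so that day-of-week differences in behavior are represented. Premature conclusions, such as stopping as soon as a result looks significant, can lead to inaccurate insights.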
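How much data is "sufficient" can be estimated before launch with a power calculation. The sketch below uses the standard normal-approximation formula for comparing two proportions with a two-sided test; the baseline and target rates are hypothetical inputs you would choose yourself, and dedicated tools may use slightly different approximations:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH variant for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2            # average rate under H0
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control)
                             + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_control - p_variant) ** 2)


# Hypothetical plan: 5% baseline conversion, hoping to detect a lift to 6%.
print(sample_size_per_variant(0.05, 0.06))
```

For these hypothetical inputs the result is roughly 8,200 users per variant. Dividing by the daily traffic eligible for the test, and rounding up to whole weeks, gives a planned duration. Smaller expected effects or higher power requirements push the number up quickly.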

7. Analyze Results

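Evaluate the performance of each variant with an appropriate statistical test, for example a two-proportion z-test or a chi-square test for conversion rates, and look at the confidence interval of the difference, not only the p-value. Metrics like conversion rate, average session duration, or click-through rate are commonly used to measure success.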
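For a conversion-rate metric, one common choice is the two-proportion z-test. A minimal sketch using only the standard library; the counts in the usage lines are made up, and the normal approximation assumes reasonably large samples (for very small counts, an exact test such as Fisher's is safer):

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates.

    Returns (z statistic, p-value), using the pooled rate under H0: p_A == p_B.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 5.0% vs 6.0% conversion on 8,000 users each.
z, p = two_proportion_z_test(conv_a=400, n_a=8000, conv_b=480, n_b=8000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up counts the test gives z of roughly 2.8 and a p-value below 0.01. The p-value is only valid if the sample size was fixed in advance rather than chosen by watching the results.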

8. Implement and Iterate

Deploy the winning variant and continue testing new hypotheses. A/B testing is an ongoing process of optimization.

Common A/B Testing Mistakes to Avoid

- Testing Without a Clear Hypothesis: Running tests without a specific question or goal leads to unfocused efforts and unclear results.

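- Insufficient Sample Size: Ending tests prematurely or with too few participants can yield misleading outcomes. Calculate the required sample size before launch and keep the test running until you reach it; repeatedly checking results and stopping at the first significant p-value inflates the false-positive rate.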

- Ignoring External Factors: Seasonal trends, marketing campaigns, or technical issues can skew results. Account for these factors when analyzing data.

- Overcomplicating Tests: Testing multiple variables simultaneously (multivariate testing) is useful but complicates analysis and needs much more traffic, since every combination of changes requires an adequate sample. Start with simple A/B tests to build confidence.

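- Relying Solely on Statistical Significance: Statistical significance only tells you that the observed difference would be unlikely if there were no real effect; it says nothing about whether the difference matters. Consider practical significance as well: a significant lift can be too small to justify the cost of the change, while a small but real lift can be valuable at high traffic volumes.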
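One way to check practical significance is to compare the confidence interval of the lift against the smallest lift worth shipping. A minimal sketch using a Wald interval for the absolute difference; the threshold `min_worthwhile_lift` is a hypothetical business input, and the counts are made up to show a result that is statistically but not practically significant:

```python
import math
from statistics import NormalDist


def lift_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for the absolute difference p_B - p_A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def practically_significant(interval, min_worthwhile_lift):
    """True only if the whole interval clears the smallest lift worth shipping."""
    low, _ = interval
    return low >= min_worthwhile_lift


# Hypothetical: 1,000,000 users per variant, 5.00% vs 5.08% conversion.
ci = lift_confidence_interval(50_000, 1_000_000, 50_800, 1_000_000)
print(ci)                                  # entirely above 0: statistically significant
print(practically_significant(ci, 0.005))  # False if a 0.5-point lift is the bar
```

Where to set the threshold is a business judgment: at very high traffic, even a lift of a few hundredths of a percentage point may be worth shipping.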

Tools for A/B Testing and Experimentation

Numerous tools are available to help businesses implement A/B testing and experimentation effectively. Here are some popular options:

- Google Optimize: Formerly a free website testing tool integrated with Google Analytics. Google discontinued it in September 2023, so it is no longer an option for new experiments.

- Optimizely: A comprehensive platform offering experimentation for web, mobile, and server-side testing.

- Adobe Target: A robust solution for personalization and A/B testing, ideal for enterprise-level businesses.

- VWO (Visual Website Optimizer): A user-friendly tool with features for A/B testing, heatmaps, and user behavior analysis.

- Crazy Egg: Focused on visual insights, such as click tracking and scroll maps, to complement A/B tests.

A/B Testing in Different Industries

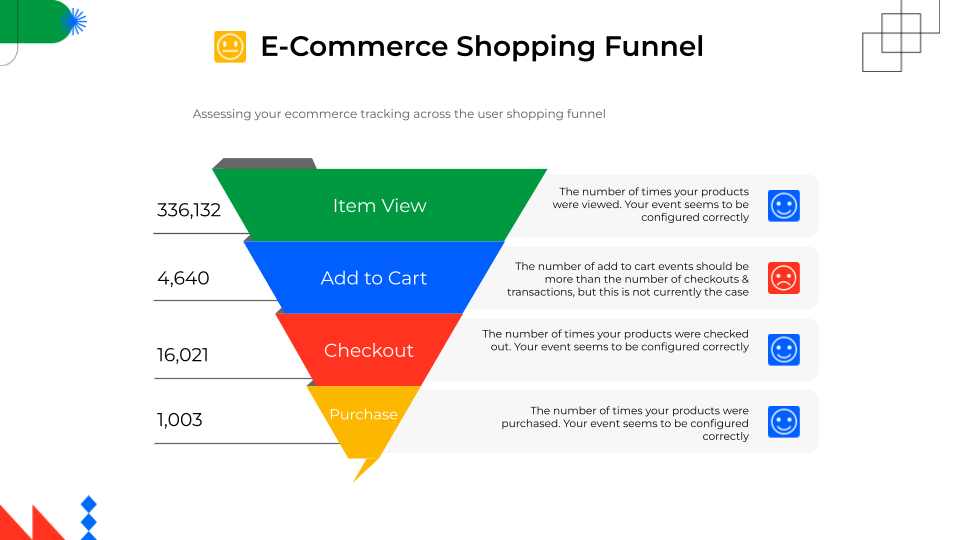

1. E-Commerce

- Testing product descriptions, images, and pricing strategies to boost sales.

- Optimizing checkout processes to reduce cart abandonment.

2. SaaS (Software as a Service)

- Experimenting with onboarding flows to increase user activation.

- Testing subscription pricing models to maximize customer lifetime value.

3. Media and Publishing

- Optimizing headlines and content layouts to increase reader engagement.

- Testing ad placements to balance user experience and revenue.

4. Travel and Hospitality

- Testing booking flows to improve conversions.

- Experimenting with loyalty program designs to enhance customer retention.

Case Study: A/B Testing in Action

A leading e-commerce company wanted to increase the conversion rate on its product pages. It hypothesized that adding customer reviews would build trust and drive purchases. An A/B test was conducted with two versions:

- Variant A: Product pages without customer reviews.

- Variant B: Product pages with customer reviews displayed prominently.

After running the test for two weeks with a sufficiently large sample, Variant B showed a statistically significant 15% higher conversion rate than Variant A. The company implemented the change site-wide, resulting in a substantial revenue boost.
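A "15% higher conversion rate" is most naturally read as a relative lift, but the case study does not give the underlying rates, and relative and absolute lifts are easy to confuse. The sketch below uses a purely hypothetical 2% baseline to show how far apart the two readings are:

```python
def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative change of B over A; 0.15 means B converts 15% better than A."""
    return (rate_b - rate_a) / rate_a


def absolute_lift(rate_a: float, rate_b: float) -> float:
    """Difference in rates as a fraction; 0.003 means 0.3 percentage points."""
    return rate_b - rate_a


# Hypothetical baseline: the case study does not report the actual rates.
rate_a = 0.020
rate_b = rate_a * 1.15  # a 15% relative improvement
print(f"relative lift: {relative_lift(rate_a, rate_b):.0%}")            # relative lift: 15%
print(f"absolute lift: {absolute_lift(rate_a, rate_b) * 100:.1f} pp")   # absolute lift: 0.3 pp
```

Reporting both numbers, along with the baseline, makes results like this easier to interpret and compare.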

The Future of A/B Testing and Experimentation

As technology evolves, A/B testing and experimentation are becoming more sophisticated. AI and machine learning are enabling automated experimentation, predictive analytics, and personalized experiences at scale. Moreover, advancements in data visualization and analysis tools are making it easier for teams to interpret results and act swiftly.
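The article does not name a specific technique, but one widely used form of automated experimentation is the multi-armed bandit, which shifts traffic toward better-performing variants while the test is still running. The sketch below simulates Thompson sampling with Beta-Bernoulli posteriors; the "true" conversion rates are hypothetical and exist only to drive the simulation:

```python
import random


def thompson_sampling(true_rates, n_rounds, seed=0):
    """Simulate a Beta-Bernoulli Thompson sampling bandit.

    true_rates are the (normally unknown) conversion rates of each variant;
    here they only generate simulated outcomes. Returns how often each
    variant was shown.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    shown = [0] * len(true_rates)
    for _ in range(n_rounds):
        # Draw a plausible rate for each variant from its Beta(1 + s, 1 + f) posterior.
        samples = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(len(samples)), key=samples.__getitem__)
        shown[arm] += 1
        # Simulated visitor converts with the chosen variant's true probability.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return shown


print(thompson_sampling([0.04, 0.10], n_rounds=5000))
```

The trade-off is that a bandit reduces the cost of showing a weaker variant but makes a clean, unbiased estimate of the difference harder to obtain than a fixed-split A/B test does.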

Conclusion

A/B testing and experimentation are invaluable for making data-driven decisions that drive growth and improve user experiences. By following a structured approach, avoiding common pitfalls, and leveraging the right tools, businesses can unlock actionable insights and stay ahead in competitive markets. Embrace the power of experimentation to refine strategies, meet user needs, and achieve measurable success.